Assignment 2 Report

AR Japanese Grammar and Flashcard Tool

Pitch

An educational twist on the convenient camera translation feature of Google Translate: an app that aims to transform repetitive flashcard study into a puzzle-solving challenge that teaches the practical use of foreign words with correct grammar.

Application Description

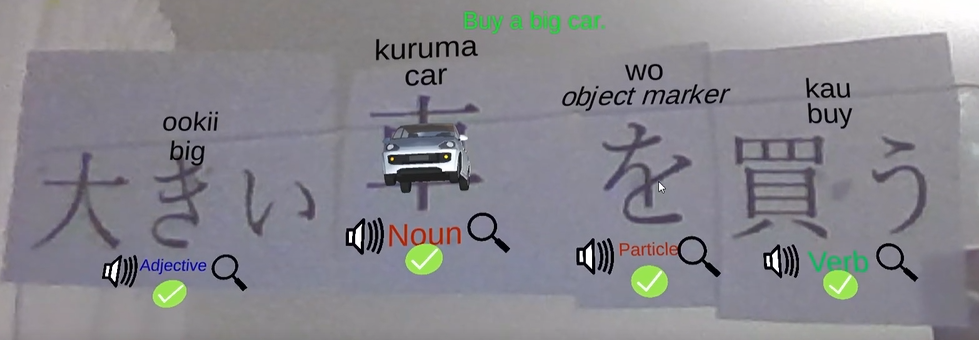

My application uses Vuforia’s Augmented Reality (AR) engine to recognise specific Japanese text and add digital learning aids – sound, 3D models, and grammar corrections – to assist users in reading the foreign words and using them to construct simple sentences.

Given the popularity of language study, many digital language learning applications are available that “gamify” study to make it more enjoyable. However, a review of commercial applications by Heil et al. found that “there is a predominant focus on teaching language as isolated vocabulary words rather than contextualized usage” (2016, p. 43). To address this, the review recommends “the incorporation of more adaptive technology that can understand what types of mistakes users are making, and thus provide more intelligent, personalized feedback” (p. 43).

Camera translation is a powerful tool for making languages accessible, as demonstrated by the Google Translate app, which now uses neural machine translation (NMT) to recognise text in camera footage and replace it with a translation (Gu 2019). An AR interface of this kind that adds not only translations but also analysis of grammatical errors, with hints to help correct them, would let users practise turning their vocabulary deck into complete sentences simply by pointing a camera at it.

Interaction Design

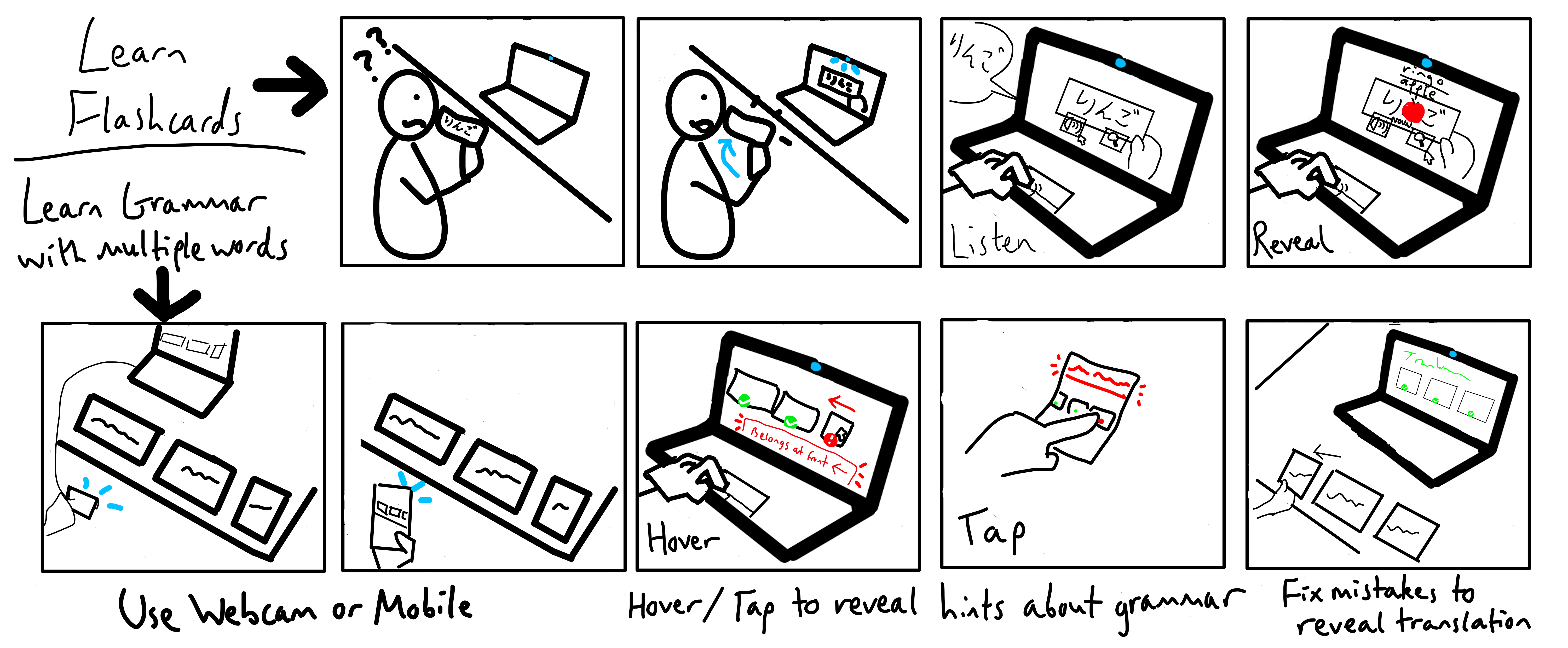

Storyboard illustrating user interaction with the application

The application uses AR to add audio and visual aids to plain Japanese text (e.g. paper flashcards or words on a screen) to assist learning. When a word is detected, two icons initially appear underneath it: a sound icon that plays the pronunciation, and a magnifying glass that reveals the English meaning, the romanised Japanese and the grammatical type. Cards of type “noun” also display a 3D model of the object the word represents. The user can modify these models by placing an adjective card to their left; for example, adding the word “big” increases the model’s scale. In a full implementation, the user would also be able to use verbs and adverbs to modify the 3D model further through animation.
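Because Japanese adjectives come before the noun they modify (e.g. 大きい猫, “big cat”), “an adjective card to the left” matches natural word order. The adjective-to-model rule can be sketched roughly as below. This is a minimal, language-neutral Python sketch, not the Unity/Vuforia code: it assumes each tracked card is reduced to its word, grammatical type and horizontal position `x`, and the `Card` class, `model_scale` function and the scale factors in `ADJECTIVE_SCALE` (including 小さい, “small”) are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical scale factors; the report only states that "big" enlarges the model.
ADJECTIVE_SCALE = {"大きい": 2.0, "小さい": 0.5}


@dataclass
class Card:
    word: str   # Japanese text printed on the card
    kind: str   # grammatical type, e.g. "noun" or "adjective"
    x: float    # assumed horizontal position of the tracked card


def model_scale(noun: Card, cards: List[Card], base: float = 1.0) -> float:
    """Return the scale of a noun's 3D model, modified by the card directly to its left."""
    # Cards strictly to the left of the noun, nearest first.
    left = sorted((c for c in cards if c.x < noun.x), key=lambda c: noun.x - c.x)
    if left and left[0].kind == "adjective":
        # Unknown adjectives leave the model at its base scale.
        return base * ADJECTIVE_SCALE.get(left[0].word, 1.0)
    return base
```

For example, with 猫 at `x = 0.3` and 大きい at `x = 0.0`, `model_scale` returns `2.0`; moving the adjective to the right of the noun returns the base scale of `1.0`. A real build would also need a maximum gap so that distant cards are ignored, which this sketch omits.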

The application uses Unity buttons rather than Vuforia’s “virtual buttons”, because the latter would have required additional tracking markers on each image target for reliable input. As Vuforia could recognise the characters without special markers, I kept the image targets simple so that anyone with a Japanese keyboard could type out their own flashcards.

The AR interface also lets users arrange cards like puzzle pieces to build sentences. A green or red status icon appears under each card; if it is red, hovering over the misplaced card (or tapping it on mobile) displays an instruction to help the user correct the mistake. Because the prototype has only a few cards, it detects only simple grammatical conventions; a full implementation would analyse whole sentences in more depth.
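A per-card status check of this kind could look roughly like the sketch below. It is a hedged Python illustration, not the prototype’s code: the report does not list its grammar rules, so the three rules here (an adjective directly before a noun, a particle after a noun, the verb at the end) are simplified hypothetical examples, and `Card` and `check_sentence` are hypothetical names. Cards are assumed to arrive already sorted left to right.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Card:
    word: str   # Japanese text printed on the card
    kind: str   # hypothetical types: "noun", "adjective", "particle", "verb"


def check_sentence(cards: List[Card]) -> List[Tuple[str, Optional[str]]]:
    """Return one (status, hint) pair per card; status is "green" or "red"."""
    results = []
    for i, card in enumerate(cards):
        prev = cards[i - 1] if i > 0 else None
        nxt = cards[i + 1] if i + 1 < len(cards) else None
        hint = None
        # Simplified, hypothetical rules for illustration only.
        if card.kind == "adjective" and (nxt is None or nxt.kind != "noun"):
            hint = "Place an adjective directly before the noun it describes."
        elif card.kind == "particle" and (prev is None or prev.kind != "noun"):
            hint = "A particle should follow a noun."
        elif card.kind == "verb" and nxt is not None:
            hint = "In Japanese the verb comes at the end of the sentence."
        results.append(("red", hint) if hint else ("green", None))
    return results
```

With the cards 大きい, 猫, を, 見る (“see a big cat”) every card is green, while 見る placed before 猫 turns the verb red and attaches the end-of-sentence hint that the hover or tap would reveal. Keeping the hint next to the status lets the interface show both from a single pass.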

Technical Development

The application was made in Unity and uses Vuforia for AR functionality. The prototype runs with a database of seven image targets, each a Japanese word written in the Yu Mincho font. These targets can form fourteen grammatically correct sentences, none of which contains more than one card of any type (e.g. noun) or is longer than four words. The desktop build seems slightly more reliable than the mobile build. If the icons appear in the wrong orientation, showing the camera a card with more than one character should fix it.
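A count of valid combinations like the one above can be checked by brute force: try every ordering of two to four cards, discard orderings that repeat a card type, and keep those a grammar rule accepts. The Python sketch below shows the technique only. The report does not list its seven cards or its rules, so `DECK` (five hypothetical cards) and `is_valid` (an assumed “(adjective) noun particle verb” pattern) are illustrative and will not reproduce the figure of fourteen.

```python
from itertools import permutations
from typing import Iterator, List, Tuple

# Hypothetical deck of (word, type) pairs; not the prototype's actual cards.
DECK: List[Tuple[str, str]] = [
    ("猫", "noun"), ("車", "noun"), ("大きい", "adjective"),
    ("を", "particle"), ("見る", "verb"),
]


def is_valid(seq: Tuple[Tuple[str, str], ...]) -> bool:
    """Hypothetical rule: noun + particle + verb, optionally preceded by an adjective."""
    kinds = [kind for _, kind in seq]
    return kinds in (["noun", "particle", "verb"],
                     ["adjective", "noun", "particle", "verb"])


def valid_sentences(deck: List[Tuple[str, str]], max_len: int = 4) -> Iterator[str]:
    """Yield each ordering of 2..max_len cards with no repeated type that passes is_valid."""
    for n in range(2, max_len + 1):
        for seq in permutations(deck, n):
            kinds = [kind for _, kind in seq]
            # Mirrors the report's constraint: at most one card of any type.
            if len(set(kinds)) == len(kinds) and is_valid(seq):
                yield "".join(word for word, _ in seq)
```

With this hypothetical deck the generator yields four sentences: 猫を見る, 車を見る, 大きい猫を見る and 大きい車を見る. Exhaustive enumeration is cheap at this scale (a few hundred orderings for seven cards and four slots), which makes it a simple way to confirm that a card set supports the intended number of puzzles.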

Descriptions of 3D Models

3D models were used to give the noun flashcards more visual context by “bringing them to life”, and to illustrate the effect of adjectives, for example by scaling a model up when it is modified by the adjective “big”.

The chibi cat by Ladymito (2020) appears on top of the 猫 (cat) card when the magnifying glass is clicked.

The realistic car by Polygonal Assets (2022) appears on top of the 車 (car) card when the magnifying glass is clicked.

References


Gu, X 2019, ‘Google Translate’s instant camera translation gets an upgrade’, Google, https://blog.google/products/translate/google-translates-instant-camera-translation-gets-upgrade/

Heil, CR, Wu, JS, Lee, JJ & Schmidt, T 2016, ‘A Review of Mobile Language Learning Applications: Trends, Challenges, and Opportunities’, The EuroCALL Review, vol. 24, no. 2, pp. 32-50

Assets

Green and red status icons and pronunciation recordings: created by me.

Japanese font: Yu Mincho (a default Japanese font in Microsoft Word)

Deferman 2008, Magnifying glass icon, Wikiversity, https://en.wikiversity.org/wiki/File:Magnifying_glass_icon.svg

Ladymito 2020, Free chibi cat, Unity Asset Store, https://assetstore.unity.com/packages/3d/characters/animals/mammals/free-chibi-cat-165490

Polygonal Assets 2022, Realistic Car, Unity Asset Store, https://assetstore.unity.com/packages/3d/vehicles/land/realistic-car-221496

Orion 8 2009, Icon sound loudspeaker, Wikipedia, https://en.wikipedia.org/wiki/File:Icon_sound_loudspeaker.svg

Scripting References

https://docs.unity3d.com/ScriptReference/Physics.Raycast.html

https://docs.unity3d.com/ScriptReference/Physics.RaycastAll.html
